From DORA to collab.dev: Evolving Development Metrics for the AI Age

As AI reshapes software development, traditional DORA metrics need to evolve. collab.dev introduces comprehensive metrics designed for the collaborative nature of modern human-AI development workflows.

DORA metrics have long been an industry standard for measuring performance in the software delivery lifecycle. The four key metrics have given teams valuable insight into delivery efficiency and stability. But as AI and automation become integral to development workflows, these traditional metrics no longer provide a complete picture of development performance.

Understanding DORA Metrics

DORA metrics provide a framework for measuring software delivery performance through four key indicators:

DORA Metrics framework visualizing the four key indicators. Image credit: GITS Apps Insight

| Metric | Description | What It Measures | Why It Matters |
|---|---|---|---|
| Deployment Frequency | How often code is deployed to production | Deployment cadence | Higher frequency indicates more efficient delivery processes |
| Lead Time for Changes | Time from commit to deployment | Process efficiency | Shorter lead times indicate better workflow optimization |
| Change Failure Rate | Percentage of deployments causing failures | Quality control | Lower rates indicate more reliable delivery processes |
| Time to Restore Service | Time to recover from failures | System resilience | Shorter recovery times indicate better incident response |
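To make the four definitions concrete, here is a minimal sketch of how they could be computed from deployment records, grouped per service as recommended below. The `Deployment` record, its field names, and the helper functions are hypothetical; real data would come from your CI/CD pipeline and incident tracker. As a simplifying assumption, time to restore is measured from the failing deployment rather than from the actual incident start.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape; real data would come from CI/CD and incident tools.
    service: str
    deployed_at: datetime
    commit_times: list[datetime] = field(default_factory=list)  # commits shipped in this deploy
    caused_failure: bool = False
    restored_at: Optional[datetime] = None  # when service was restored, if the deploy failed


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_metrics(deploys: list[Deployment], window_days: int) -> dict:
    """Compute the four DORA metrics for a single service over a time window."""
    lead_times = [hours(d.deployed_at - c) for d in deploys for c in d.commit_times]
    failures = [d for d in deploys if d.caused_failure]
    # Simplification: restore time is measured from the failing deploy, not incident start.
    restore_times = [
        hours(d.restored_at - d.deployed_at) for d in failures if d.restored_at is not None
    ]
    return {
        "deploys_per_day": len(deploys) / window_days,
        "median_lead_time_h": median(lead_times) if lead_times else None,
        "change_failure_rate": len(failures) / len(deploys) if deploys else None,
        "median_time_to_restore_h": median(restore_times) if restore_times else None,
    }


def dora_by_service(deploys: list[Deployment], window_days: int) -> dict[str, dict]:
    """Group deployments per service so each service is measured on its own."""
    groups: dict[str, list[Deployment]] = defaultdict(list)
    for d in deploys:
        groups[d.service].append(d)
    return {svc: dora_metrics(ds, window_days) for svc, ds in groups.items()}


t0 = datetime(2024, 1, 1, 12)
sample = [
    Deployment("api", t0, [t0 - timedelta(hours=4)]),
    Deployment("api", t0 + timedelta(days=1), [t0 + timedelta(hours=22)],
               caused_failure=True, restored_at=t0 + timedelta(days=1, hours=1)),
]
print(dora_by_service(sample, window_days=7)["api"])
```

Medians are used instead of means because a single very slow change or long outage would otherwise dominate the result.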

These metrics serve as both leading indicators for organizational performance and lagging indicators for software development practices. They work best when applied to individual applications or services, as comparing metrics across vastly different systems can be misleading.

While these metrics remain relevant, they do not capture how complex and collaborative software development has become. This is especially true in modern teams, where developers work alongside AI agents and automation tools. Teams now need metrics that reflect the collaborative nature of human-AI development workflows.

collab.dev: Metrics for Modern Development

collab.dev introduces a comprehensive set of metrics focused on understanding and optimizing the collaborative aspects of software development. It analyzes the last 100 merged pull requests per repository, capturing key events throughout the PR lifecycle.
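The sampling window described above can be sketched as follows: keep only merged PRs, sort them by merge time, and take the most recent 100. The `PullRequest` record and `last_merged` function are hypothetical illustrations; collab.dev's actual data model is not described here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class PullRequest:
    # Hypothetical, simplified PR record.
    number: int
    created_at: datetime
    merged_at: Optional[datetime]  # None if the PR was closed or is still open


def last_merged(prs: list[PullRequest], limit: int = 100) -> list[PullRequest]:
    """Return the `limit` most recently merged PRs, newest first."""
    merged = [pr for pr in prs if pr.merged_at is not None]
    merged.sort(key=lambda pr: pr.merged_at, reverse=True)
    return merged[:limit]


base = datetime(2024, 1, 1)
# Every even-numbered PR is merged 30 minutes after creation; odd ones never merge.
prs = [
    PullRequest(i, base + timedelta(hours=i),
                base + timedelta(hours=i, minutes=30) if i % 2 == 0 else None)
    for i in range(250)
]
window = last_merged(prs)
print(len(window), window[0].number, window[-1].number)  # 100 248 50
```

Because the window is a fixed count rather than a fixed time span, a busy repository's 100 PRs may cover a few weeks while a quieter one's cover many months, which is worth keeping in mind when comparing repositories.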

| Metric | Description | Purpose |

|---|---|---|

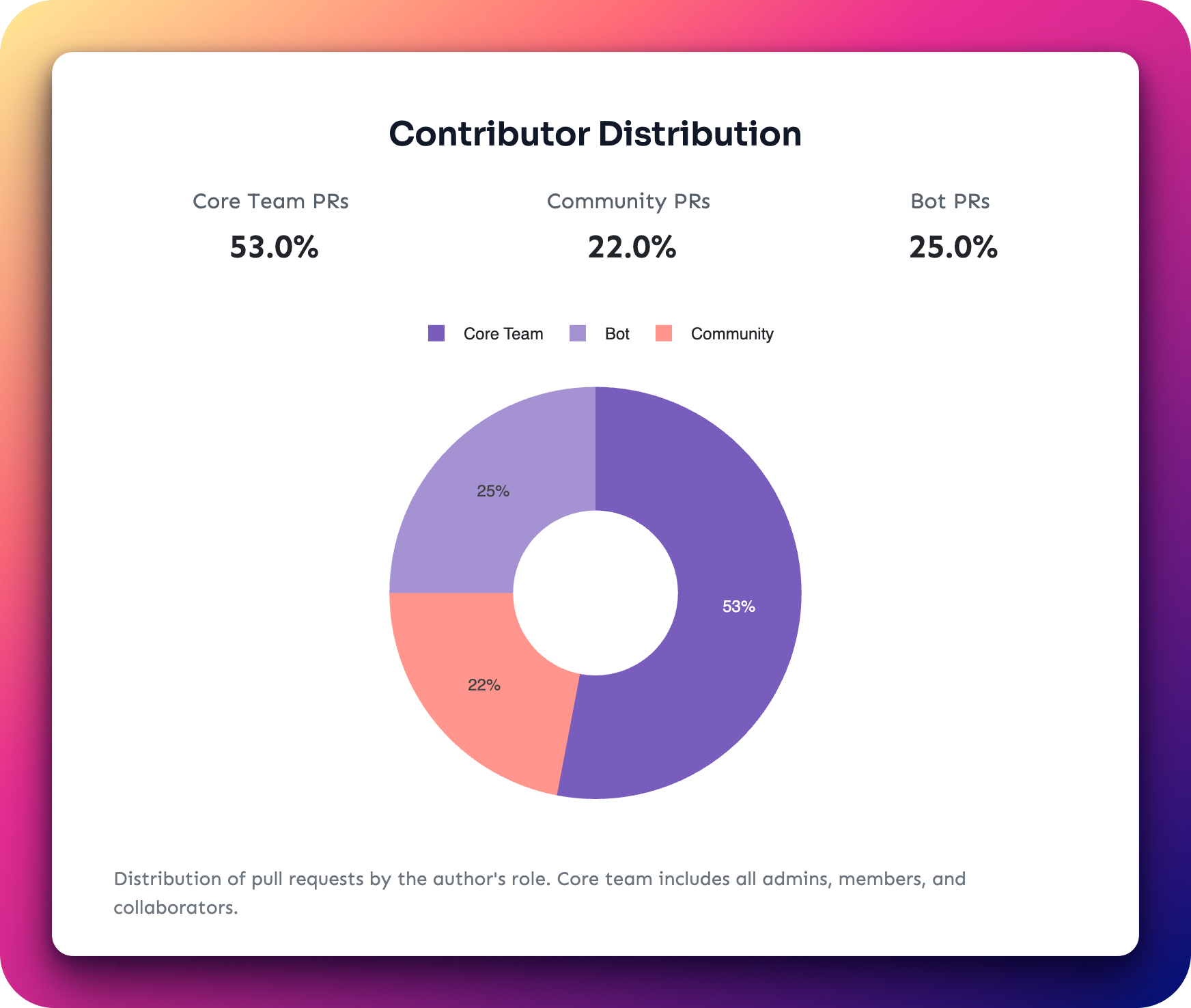

| Contributor Distribution | Categorizes PRs by origin (Core Team, Community, Bots) | Measure community engagement and automation impact |

| Bot Activity Analysis | Tracks bot contributions and activity types | Evaluate automation effectiveness and balance |

| Review Rate | Percentage of PRs receiving reviews | Monitor review coverage |

| Approval Rate | Proportion of reviewed PRs that get approved | Track review effectiveness |

| Review-Merge Coverage | PRs merged with proper review | Ensure quality control |

| Review Turnaround | Time to first review | Measure review responsiveness |

Svelte Contributor Distribution
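The contributor and review metrics in the table above can be illustrated with a small sketch over a list of merged PRs. Everything here is an assumption for illustration: the `PR` and `Review` records are hypothetical, bots are detected with a simple heuristic (GitHub bot accounts have logins ending in `[bot]`), core-team membership is passed in as a set, and review turnaround is measured from PR creation because the table does not specify the starting event.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Review:
    reviewer: str
    submitted_at: datetime
    state: str  # GitHub-style state: "APPROVED", "COMMENTED", or "CHANGES_REQUESTED"


@dataclass
class PR:
    # Hypothetical merged-PR record.
    author: str
    created_at: datetime
    merged_at: datetime
    reviews: list[Review] = field(default_factory=list)


def classify_author(author: str, core_team: set[str]) -> str:
    # Heuristic: GitHub bot accounts use logins ending in "[bot]".
    if author.endswith("[bot]"):
        return "Bots"
    return "Core Team" if author in core_team else "Community"


def review_metrics(prs: list[PR], core_team: set[str]) -> dict:
    n = len(prs)
    distribution = Counter(classify_author(p.author, core_team) for p in prs)
    reviewed = [p for p in prs if p.reviews]
    approved = [p for p in reviewed if any(r.state == "APPROVED" for r in p.reviews)]
    # Coverage counts only reviews submitted before the merge happened.
    covered = [p for p in prs if any(r.submitted_at <= p.merged_at for r in p.reviews)]
    # Assumption: turnaround runs from PR creation to the earliest review.
    turnaround_h = [
        (min(r.submitted_at for r in p.reviews) - p.created_at).total_seconds() / 3600
        for p in reviewed
    ]
    return {
        "distribution": dict(distribution),
        "review_rate": len(reviewed) / n if n else 0.0,
        "approval_rate": len(approved) / len(reviewed) if reviewed else 0.0,
        "review_merge_coverage": len(covered) / n if n else 0.0,
        "median_turnaround_h": median(turnaround_h) if turnaround_h else None,
    }


t = datetime(2024, 3, 1, 9)
core = {"alice"}
sample_prs = [
    PR("alice", t, t + timedelta(hours=5), [Review("bob", t + timedelta(hours=2), "APPROVED")]),
    PR("carol", t, t + timedelta(hours=8), [Review("alice", t + timedelta(hours=4), "COMMENTED")]),
    PR("dependabot[bot]", t, t + timedelta(hours=1)),
    # Reviewed only after merge, so it does not count toward coverage.
    PR("dave", t, t + timedelta(hours=3), [Review("alice", t + timedelta(hours=6), "APPROVED")]),
]
print(review_metrics(sample_prs, core))
```

Note how review rate and review-merge coverage can diverge: a PR reviewed after it was merged counts as reviewed but not as covered.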

| Metric | Description | Purpose |
|---|---|---|
| Approval Time | Duration from review request to approval | Track review efficiency |
| Merge Time | Time from PR creation to merge | Monitor overall process speed |
| Wait Time Analysis | Delays in different review stages | Identify bottlenecks |
| PR Progression | Movement through review stages | Visualize process flow |
| Bottleneck Detection | Process inefficiencies | Optimize collaboration patterns |

Request Approval Time for Vercel AI
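The timing metrics above can be sketched by recording when each PR reaches each stage and measuring the gaps between stages. The stage names, the `Timeline` record, and the rule that the step with the largest median wait is "the bottleneck" are all hypothetical simplifications; collab.dev's own stage definitions and detection logic may differ.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

# Hypothetical stage order for a PR's lifecycle.
STAGES = ["created", "review_requested", "first_review", "approved", "merged"]


@dataclass
class Timeline:
    # Maps a stage name to when the PR reached it; stages a PR skipped are absent.
    events: dict[str, datetime]


def hours_between(t: Timeline, start: str, end: str) -> Optional[float]:
    if start in t.events and end in t.events:
        return (t.events[end] - t.events[start]).total_seconds() / 3600
    return None


def stage_waits(t: Timeline) -> dict[str, float]:
    """Hours spent between each consecutive pair of stages the PR actually reached."""
    reached = [s for s in STAGES if s in t.events]
    return {f"{a}->{b}": hours_between(t, a, b) for a, b in zip(reached, reached[1:])}


def flow_report(timelines: list[Timeline]) -> dict:
    waits: dict[str, list[float]] = {}
    for t in timelines:
        for step, h in stage_waits(t).items():
            waits.setdefault(step, []).append(h)
    median_waits = {step: median(hs) for step, hs in waits.items()}
    approval = [hours_between(t, "review_requested", "approved") for t in timelines]
    approval = [h for h in approval if h is not None]
    merge = [hours_between(t, "created", "merged") for t in timelines]
    merge = [h for h in merge if h is not None]
    return {
        "median_approval_h": median(approval) if approval else None,
        "median_merge_h": median(merge) if merge else None,
        "median_wait_by_step": median_waits,
        # Heuristic: flag the step with the largest median wait as the bottleneck.
        "bottleneck": max(median_waits, key=median_waits.get) if median_waits else None,
    }


t0 = datetime(2024, 5, 1, 9)


def at(h: float) -> datetime:
    return t0 + timedelta(hours=h)


sample = [
    Timeline({"created": at(0), "review_requested": at(1), "first_review": at(9),
              "approved": at(10), "merged": at(11)}),
    Timeline({"created": at(0), "review_requested": at(2), "first_review": at(8),
              "approved": at(12), "merged": at(13)}),
]
print(flow_report(sample))
```

When a PR skips a stage (for example, a review arrives without an explicit request), its wait is recorded under a different step key such as `created->first_review`, which keeps such PRs from distorting the medians of the standard path.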

These metrics provide critical visibility into aspects of development that DORA metrics cannot address, particularly around collaboration quality, automation impact, and review process efficiency.

Looking Forward

Development practices continue to evolve with AI and automation, and the metrics used to measure them must evolve too. While DORA metrics remain valuable for measuring deployment performance, collab.dev provides a more comprehensive view of modern development workflows. By combining traditional deployment metrics with detailed collaboration analysis, teams can better understand and optimize their development processes in the age of AI.

For more information about how collab.dev can help your team measure and improve collaboration effectiveness, visit collab.dev.