From Chains to Protocols: The Journey to MCP and What It Solves

The evolution from prompt hacking to the Model Context Protocol (MCP) represents a leap forward in AI tooling. Learn how MCP solved fragmentation, improved security, and made AI tools truly reusable across frameworks.

When LLMs first started “calling tools,” we were just grateful it kind of worked.

You’d stuff a prompt with instructions, coax a structured response, and hope your parser could make sense of the result.

It was duct tape, but it moved.

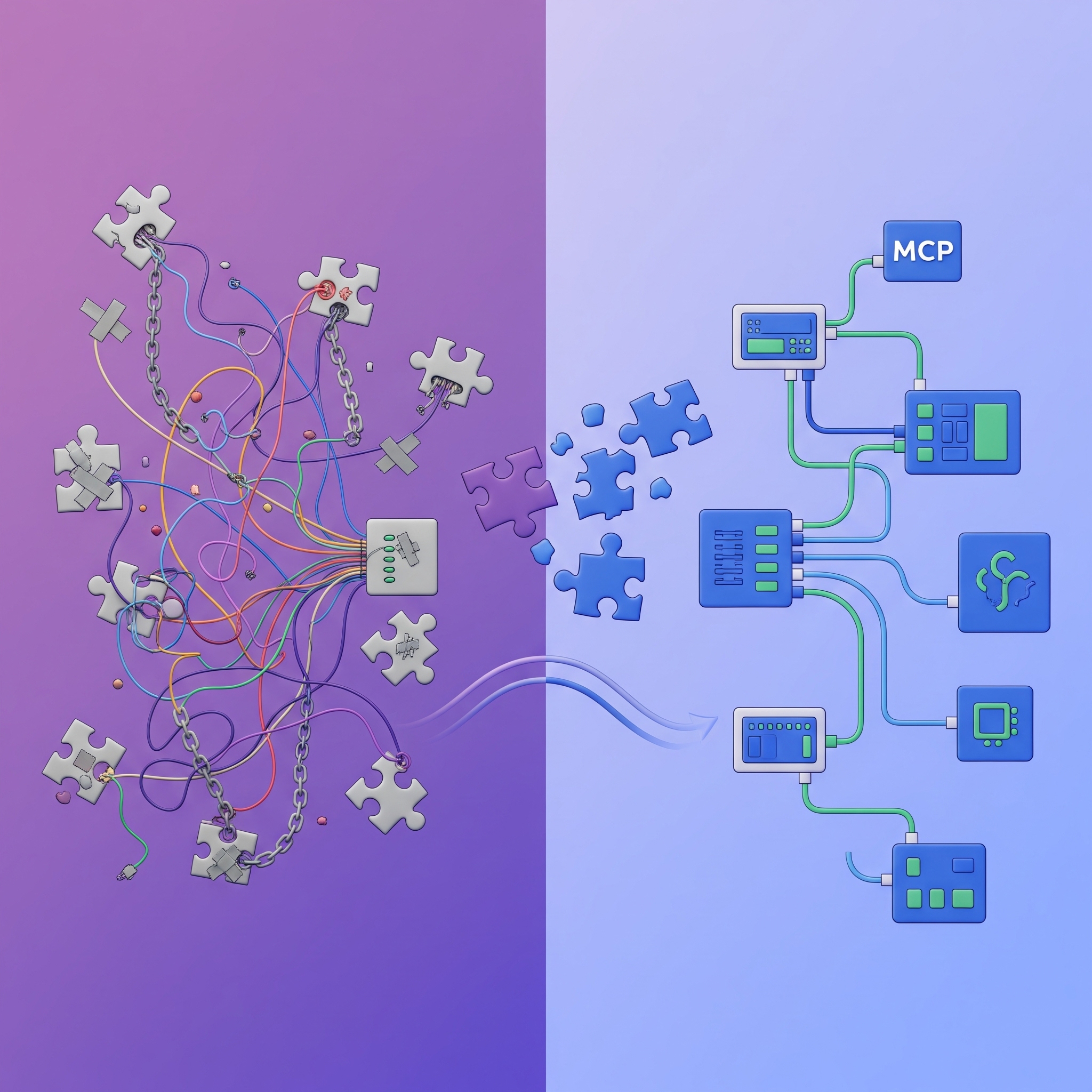

As workflows got more complex (multi-step agents, toolchains, retries), the cracks widened. Everyone rolled their own format. Nothing was portable. Observability was a guessing game.

Enter the Model Context Protocol (MCP): a standardized, open way for models to discover and safely invoke tools.

I’ve noticed lots of “I built X with MCP” content, but not enough reflection on how we got here. We’re jumping straight to the “what” without appreciating the “why”: the messy journey from prompt hacking to standardized protocols.

This isn’t just another MCP tutorial; it’s the story of why we needed MCP in the first place, and how far we’ve actually come.

🛠️ The Early Days: Prompt Hacking & Tool Mayhem

Back in the early days of tool use, most setups looked like this:

- Ask the model to output JSON

- Parse it manually

- Call a function if things looked okay

If it broke, you added more retries. If the model hallucinated a key name, you updated the prompt. It worked… until it didn’t.
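
The pattern above can be sketched roughly as follows. This is an illustrative reconstruction, not code from any specific project: `fake_model`, `get_weather`, and the prompt text are hypothetical stand-ins, and the model is a stub so the example runs offline.

```python
import json
import re

# Hypothetical tool: in early setups this was just a function in some module.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

# The tool "schema" lived inside the prompt text itself.
PROMPT = 'Reply ONLY with JSON like {"tool": "get_weather", "city": "<name>"}'

def fake_model(prompt: str, attempt: int) -> str:
    """Stub standing in for an LLM: the first reply uses a hallucinated key."""
    if attempt == 0:
        return 'Sure! {"tool": "get_weather", "location": "Paris"}'
    return 'Here you go: {"tool": "get_weather", "city": "Paris"}'

def call_tool_with_retries(max_attempts: int = 3) -> str:
    for attempt in range(max_attempts):
        reply = fake_model(PROMPT, attempt)
        # One-off regex to dig the JSON object out of the surrounding chatter.
        match = re.search(r"\{.*\}", reply, re.DOTALL)
        if not match:
            continue
        try:
            payload = json.loads(match.group(0))
        except json.JSONDecodeError:
            continue
        # "Call a function if things looked okay."
        if payload.get("tool") == "get_weather" and "city" in payload:
            return get_weather(payload["city"])
    raise RuntimeError("model never produced a usable tool call")

result = call_tool_with_retries()
```

Note how the contract is spread across three places (prompt, regex, and the `if` check), so a renamed key means editing all of them.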

We had one project where a tool’s schema lived in the prompt, the parser logic was a one-off regex, and the “tool call” was just a function buried in some Python file. Debugging was pure archaeology.

LangChain helped by introducing chains (structured flows of prompts, tools, and outputs). But tools were deeply tied to the framework. You couldn’t easily reuse or share them across projects.

There was no common format. No clean way to say “here’s what this tool expects” or “here’s how to call it.” We were all reinventing the same wiring, over and over.

🔄 Then Came the Agents (But Tools Still Lagged Behind)

As orchestration got smarter, frameworks like LangGraph, AutoGen, and CrewAI introduced agent runtimes that let LLMs reason across multiple steps, retry failed tools, or ask follow-up questions.

That was a huge leap forward.

But tooling was still fragmented:

- Every framework had its own way of defining and calling tools

- Inputs/outputs weren’t always structured

- Security? Mostly vibes

We ran into issues like:

- A model calling a tool with an invalid payload and no validation in place

- Logs showing tools ran, but we couldn’t tell what inputs were passed

- Struggling to port tools from LangChain to LangGraph without rewriting everything

Even with better agents, tools were the weak link.

📦 Enter MCP: A Standard for LLM Tooling

In late 2024, Anthropic introduced MCP: the Model Context Protocol.

It’s simple but powerful:

- Tools declare a JSON Schema (inputs, outputs, description)

- Expose a `/mcp` endpoint (HTTP or stdio)

- Any LLM agent can query that endpoint, understand the tool, and invoke it

It flips the model: tools become first-class, self-describing, reusable components. You no longer need to tie tools to a specific framework or prompt structure.
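
To make "self-describing" concrete, here is a rough sketch of what a tool definition and the two core requests look like on the wire. MCP messages are JSON-RPC 2.0, with `tools/list` for discovery and `tools/call` for invocation. The `get_weather` tool and its fields are hypothetical examples, and the descriptor shows only a subset of what the specification allows.

```python
import json

# Hypothetical tool descriptor, as a server might return it from tools/list.
weather_tool = {
    "name": "get_weather",
    "description": "Return the current weather for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}

# Discovery: the client asks which tools exist.
list_request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}

# Invocation: the client calls a tool by name with schema-shaped arguments.
call_request = {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "get_weather", "arguments": {"city": "Paris"}},
}

# Serialized form, ready to send over whichever transport is in use.
wire_message = json.dumps(call_request)
```

Because the descriptor travels with the tool, a client needs no prompt-embedded schema or framework-specific wrapper to learn what `get_weather` expects.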

It’s already supported across:

- LangChain (via `langchain-mcp-adapters`)

- LangGraph (which uses MCP behind the scenes)

- Claude Desktop, OpenDevin, and others

You can host tools on your own server, publish them internally, or compose multiple MCP tools into more complex flows.

🔗 How MCP Fits Into the Ecosystem

Here’s how it all comes together:

    +---------------------+
    |   LangGraph Agent   |
    | (Or any MCP client) |
    +---------+-----------+
              |
              v
    +---------------------+
    |    MCP Tool Call    | --> POST /mcp/invoke
    |   (Standard JSON)   | --> Schema-based validation
    +---------------------+
              |
              v
    +---------------------+
    |   Your Tool Logic   |
    | (any language/API)  |
    +---------------------+

- LangGraph = orchestration engine (decides what to do)

- MCP = the protocol layer (how to call tools)

- Your Tools = reusable endpoints, portable across projects
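
The three layers can be mimicked in a few lines. The sketch below is an in-process toy, not an MCP implementation: `TOOLS`, `handle_request`, and `add` are made-up names, and a real server would speak JSON-RPC over stdio or HTTP rather than take a dict directly.

```python
from typing import Any, Callable

# "Your Tools": plain functions, unaware of any agent framework.
def add(a: float, b: float) -> float:
    return a + b

# Registry pairing each tool's description with its implementation (hypothetical layout).
TOOLS: dict[str, dict[str, Any]] = {
    "add": {"description": "Add two numbers.", "handler": add},
}

def handle_request(request: dict[str, Any]) -> dict[str, Any]:
    """Protocol layer: route a request to discovery or invocation."""
    method = request["method"]
    if method == "tools/list":
        listing = [{"name": n, "description": t["description"]} for n, t in TOOLS.items()]
        return {"tools": listing}
    if method == "tools/call":
        params = request["params"]
        tool = TOOLS.get(params["name"])
        if tool is None:
            return {"error": f"unknown tool: {params['name']}"}
        handler: Callable[..., Any] = tool["handler"]
        return {"result": handler(**params["arguments"])}
    return {"error": f"unsupported method: {method}"}

# "Orchestration": the client discovers tools, then decides which one to call.
available = handle_request({"method": "tools/list"})
answer = handle_request(
    {"method": "tools/call", "params": {"name": "add", "arguments": {"a": 2, "b": 3}}}
)
```

The orchestrator only ever touches `handle_request`, so swapping the agent framework leaves the tool code unchanged.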

✅ Real-World Wins from MCP

Since switching to MCP for our own agents, we’ve noticed big improvements:

Better reuse

One tool, one schema: used across multiple agents and flows.

Debuggable

Clear logs of input/output for every tool call. Easier to trace issues.
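
One way to get that kind of trace is to log every call at the boundary. A minimal sketch, assuming tools are plain Python callables; `logged_tool` and `CALL_LOG` are hypothetical names, and a real deployment would likely send records to a logging backend rather than an in-memory list.

```python
import functools
import json
import time
from typing import Any, Callable

CALL_LOG: list[dict[str, Any]] = []  # stand-in for a real log sink

def logged_tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Record name, inputs, output or error, and duration for each call."""
    @functools.wraps(func)
    def wrapper(**arguments: Any) -> Any:
        record: dict[str, Any] = {"tool": func.__name__, "input": arguments}
        start = time.perf_counter()
        try:
            record["output"] = func(**arguments)
            return record["output"]
        except Exception as exc:
            record["error"] = repr(exc)
            raise
        finally:
            # Runs on success and failure alike, so no call goes unrecorded.
            record["seconds"] = time.perf_counter() - start
            CALL_LOG.append(record)
    return wrapper

@logged_tool
def divide(a: float, b: float) -> float:
    return a / b

divide(a=6, b=3)
try:
    divide(a=1, b=0)
except ZeroDivisionError:
    pass

print(json.dumps(CALL_LOG, indent=2))
```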

Safe by default

Schema validation guards against malformed calls. Authorization headers can be required. Tools can’t be “hallucinated” into existence.
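
A minimal sketch of that guard, using only the standard library. The `validate` helper is hypothetical and checks just required keys, unexpected keys, and a few primitive types, which is a small subset of JSON Schema; in practice a full validator library would do this job.

```python
from typing import Any

# Maps JSON Schema type names to Python types (subset, for illustration).
JSON_TYPES = {"string": str, "boolean": bool, "object": dict}

# Hypothetical registry of declared tool schemas.
SCHEMAS = {
    "get_weather": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}

def validate(tool_name: str, arguments: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the call is acceptable."""
    schema = SCHEMAS.get(tool_name)
    if schema is None:
        return [f"unknown tool: {tool_name}"]  # a hallucinated tool is rejected here
    problems = []
    for key in schema.get("required", []):
        if key not in arguments:
            problems.append(f"missing required field: {key}")
    for key, value in arguments.items():
        spec = schema["properties"].get(key)
        if spec is None:
            problems.append(f"unexpected field: {key}")
        elif not isinstance(value, JSON_TYPES[spec["type"]]):
            problems.append(f"wrong type for {key}: expected {spec['type']}")
    return problems

ok = validate("get_weather", {"city": "Paris"})
bad = validate("get_weather", {"location": "Paris"})
ghost = validate("delete_everything", {})
```

The hallucinated key name that once required a prompt tweak now fails fast with a specific message before any tool code runs.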

Composable

Tools can call other tools, or be orchestrated in parallel. No special wrappers needed.
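
Parallel orchestration might look like the following sketch, assuming each tool is exposed to the client as an async callable. `call_tool` is a hypothetical stand-in for a real MCP client call, the tool names are invented, and the sleep simulates network latency.

```python
import asyncio
from typing import Any

async def call_tool(name: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Hypothetical stand-in for a real client call to a remote tool."""
    await asyncio.sleep(0.01)  # simulated latency
    return {"tool": name, "echo": arguments}

async def gather_context(city: str) -> list[dict[str, Any]]:
    # Independent tools run concurrently; results come back in call order.
    return await asyncio.gather(
        call_tool("get_weather", {"city": city}),
        call_tool("get_news", {"topic": city}),
    )

results = asyncio.run(gather_context("Paris"))
```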

🚧 What’s Still Evolving

MCP is still early, and some parts of the ecosystem are catching up.

Tool discovery

There’s no universal registry (yet). Sharing tools still happens team by team.

Versioning & compatibility

Schemas evolve. Backwards compatibility isn’t solved out of the box.
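
One check that can be automated is whether a new schema version could break existing callers. The sketch below uses a deliberately narrow rule of thumb (a change is flagged if it adds a required field, or removes or retypes an existing property); `breaking_changes` is a hypothetical helper, not part of MCP.

```python
from typing import Any

def breaking_changes(old: dict[str, Any], new: dict[str, Any]) -> list[str]:
    """List changes in `new` that could reject calls valid under `old`."""
    issues = []
    old_props, new_props = old.get("properties", {}), new.get("properties", {})
    # Callers written against the old schema will not send newly required fields.
    for field in sorted(set(new.get("required", [])) - set(old.get("required", []))):
        issues.append(f"new required field: {field}")
    for field, spec in old_props.items():
        if field not in new_props:
            issues.append(f"removed field: {field}")
        elif new_props[field].get("type") != spec.get("type"):
            issues.append(f"type changed: {field}")
    return issues

v1 = {"properties": {"city": {"type": "string"}}, "required": ["city"]}
v2 = {
    "properties": {"city": {"type": "string"}, "units": {"type": "string"}},
    "required": ["city", "units"],
}
v2_compatible = {**v2, "required": ["city"]}  # same new field, but optional

strict = breaking_changes(v1, v2)
relaxed = breaking_changes(v1, v2_compatible)
```

Running a check like this in CI is one possible stopgap until the ecosystem settles on a versioning convention.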

Governance

Who can call which tool? From where?

Protocols like ETDI and projects like MCP Guardian are starting to address this with runtime policies and web application firewalls (WAFs).
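
A runtime policy can be as simple as an allowlist checked before dispatch. This is a toy sketch with hypothetical names (`POLICY`, `is_allowed`, and the agent and tool names); it does not reflect how ETDI or MCP Guardian work internally.

```python
# Hypothetical policy: which caller may invoke which tools.
POLICY: dict[str, set[str]] = {
    "support-agent": {"get_weather", "search_docs"},
    "admin-agent": {"get_weather", "search_docs", "delete_record"},
}

def is_allowed(caller: str, tool_name: str) -> bool:
    """Deny by default: unknown callers and unlisted tools are rejected."""
    return tool_name in POLICY.get(caller, set())

checks = {
    ("support-agent", "search_docs"): is_allowed("support-agent", "search_docs"),
    ("support-agent", "delete_record"): is_allowed("support-agent", "delete_record"),
    ("unknown-agent", "get_weather"): is_allowed("unknown-agent", "get_weather"),
}
```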

🧠 Final Takeaways

- Tool use with LLMs started as a hack, then grew into a tangled web of framework glue

- Agent runtimes improved orchestration, but left tooling inconsistent and insecure

- MCP offers a clean, open standard that finally decouples tools from frameworks

- The result? Reusable, debuggable, secure tooling at scale

We’re just at the beginning of the MCP ecosystem. But it’s already reshaping how we think about AI agents, tools, and collaboration.