Vibe Coding: Why Microservices Are Cool Again

The surprising synergy between LLM code generation and modular architecture: why microservices are making a comeback in the age of AI-assisted development.


The Rise of Vibe Coding

Somewhere between autocomplete and AGI, a new term entered the developer lexicon: vibe coding — the act of building software by prompting an LLM and iterating in flow.

Coined by Andrej Karpathy, the term evokes a jazz-like rhythm:

“You prompt, it codes, you tweak, it gets better — you vibe.”

But not everyone’s vibing.

Andrew Ng recently called the term “unfortunate,” warning it trivializes the deep, focused labor of AI-assisted engineering. Hacker News lit up with takes ranging from “this is the future” to “this is how the future explodes in prod.”

So… is vibe coding real? Yes. But it only works when the architecture supports it. And that’s where microservices make a surprise comeback.

Why Monoliths Kill the Vibe

Large monolithic codebases — whether human-crafted or LLM-generated — are notoriously hard to work with. Not because they’re morally wrong. Because they’re cognitively dense.

As monoliths grow, they create a degradation spiral. The cognitive overhead of understanding the entire system becomes untenable, so developers resort to localized fixes: "I'll just make this part work." These tactical shortcuts accumulate as technical debt, introducing inconsistent patterns, tighter coupling, and architectural drift. The codebase becomes progressively harder to reason about, which encourages still more shortcuts. The result is a feedback loop in which complexity compounds with every change.

For humans, monoliths require tribal knowledge and cautious refactoring.

For LLMs, they stretch the limits of context windows and dilute the model’s ability to make accurate predictions.

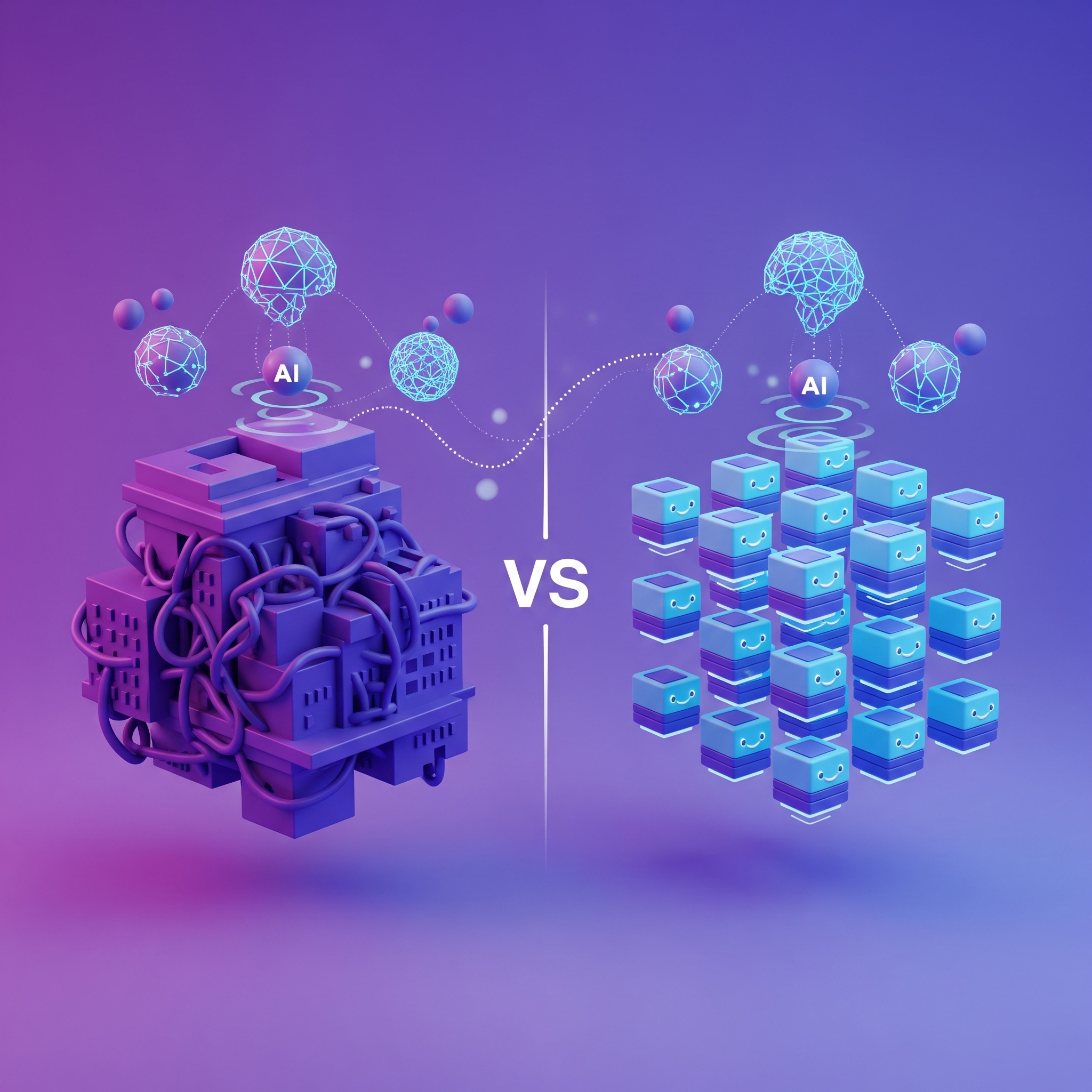

Why LLMs Struggle With Monoliths

LLMs process input as tokens and use attention mechanisms to weigh those tokens against one another. As your codebase grows, so does the number of tokens needed to represent it, often beyond what the model can meaningfully attend to.

What happens?

- Dependencies become too distant in token space.

- Signals get lost in noise.

- The model’s attention gets diffused, weakening its ability to recognize relevant context.

- Outputs become blurrier, less confident, and more error-prone.
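A simplified model shows why attention dilutes as context grows. In standard scaled dot-product attention, each query $i$ distributes a fixed budget of weight across all $n$ tokens in context. Here $q_i$ and $k_j$ are query and key vectors of dimension $d$:

$$\alpha_{ij} = \frac{\exp\left(q_i^\top k_j / \sqrt{d}\right)}{\sum_{l=1}^{n} \exp\left(q_i^\top k_l / \sqrt{d}\right)}, \qquad \sum_{j=1}^{n} \alpha_{ij} = 1$$

As a toy assumption, suppose $m$ relevant tokens each score $\Delta$ higher than the other $n - m$ tokens. Dividing numerator and denominator by the common factor gives the total weight on the relevant tokens:

$$A_{\text{rel}} = \frac{m\,e^{\Delta}}{m\,e^{\Delta} + (n - m)}$$

With $m = 10$ and $\Delta = 5$, this share is about 0.60 at $n = 1{,}000$ but about 0.015 at $n = 100{,}000$. Real models use many heads and layers and can learn much sharper scores. This sketch therefore illustrates the pressure toward diffusion rather than measuring it.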

Long story short: even the smartest model struggles when asked to make changes across a 100k-line codebase full of deeply coupled logic. It's like debugging a complex system through a keyhole. You can see only a small window of code at a time, and critical dependencies and context sit outside your field of view.
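To make the scale concrete, here is a back-of-the-envelope estimate. It assumes an average of about 40 characters per line and the common rule of thumb of roughly 4 characters per token. The 128k-token window is an example figure, not any particular model's limit, and the 3k-line service is hypothetical. Real tokenizers and codebases will differ.

```typescript
// Rough token-budget estimate for a body of code.
// Assumptions (illustrative, not measured): ~40 chars per line, ~4 chars per token.
interface BudgetEstimate {
  estimatedTokens: number;
  fitsInWindow: boolean;
  windowsNeeded: number; // full context windows needed to hold the code end to end
}

function estimateTokenBudget(
  lines: number,
  avgCharsPerLine = 40,
  charsPerToken = 4,
  contextWindowTokens = 128_000, // example window size, not a specific model's
): BudgetEstimate {
  const estimatedTokens = Math.ceil((lines * avgCharsPerLine) / charsPerToken);
  return {
    estimatedTokens,
    fitsInWindow: estimatedTokens <= contextWindowTokens,
    windowsNeeded: Math.ceil(estimatedTokens / contextWindowTokens),
  };
}

// A 100k-line monolith vs. a hypothetical 3k-line service.
const monolith = estimateTokenBudget(100_000);
const service = estimateTokenBudget(3_000);
console.log(monolith); // estimatedTokens: 1000000, fitsInWindow: false, windowsNeeded: 8
console.log(service); // estimatedTokens: 30000, fitsInWindow: true, windowsNeeded: 1
```

Under these assumptions, the monolith needs roughly eight example-sized windows just to be read once. A single service fits in one window with room left over for instructions and tests.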

Why Microservices Make LLMs Shine

Microservices break complex systems into small, purpose-built modules. For LLMs, that’s gold.

Each service becomes:

- A promptable unit

- A testable target

- A unit small enough to fit in a single context window

You can tell an LLM:

“Build a notification service that sends Slack alerts on deploy failures.”

…and it can generate a working service with routes, tests, and infrastructure glue — all without dragging in your entire backend.
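For a sense of scale, here is a minimal sketch of the core logic such a prompt might yield. The `DeployEvent` shape and the function names are hypothetical. The only external contract assumed is that a Slack incoming webhook accepts a JSON POST body with a `text` field. The sender is injected as a function, so the alert logic can be tested without touching the network.

```typescript
// Hypothetical event a CI/CD system sends when a deploy finishes.
interface DeployEvent {
  service: string;
  environment: string;
  status: "succeeded" | "failed";
  commit: string;
  logUrl?: string;
}

// Minimal Slack message body: incoming webhooks accept JSON with a `text` field.
interface SlackMessage {
  text: string;
}

// Anything that can deliver a message; injected so tests avoid the network.
type Sender = (message: SlackMessage) => Promise<void>;

// Only failed deploys produce an alert; everything else returns null.
function buildAlert(event: DeployEvent): SlackMessage | null {
  if (event.status !== "failed") return null;
  const parts = [
    `:rotating_light: Deploy failed: ${event.service} (${event.environment})`,
    `commit ${event.commit.slice(0, 7)}`, // short SHA for readability
  ];
  if (event.logUrl) parts.push(`logs: ${event.logUrl}`);
  return { text: parts.join(" | ") };
}

// Production sender: POST the message as JSON to a Slack incoming-webhook URL.
function slackWebhookSender(webhookUrl: string): Sender {
  return async (message) => {
    const res = await fetch(webhookUrl, {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(message),
    });
    if (!res.ok) throw new Error(`Slack webhook returned ${res.status}`);
  };
}

// What the service's route handler would call for each incoming deploy event.
async function handleDeployEvent(event: DeployEvent, send: Sender): Promise<boolean> {
  const alert = buildAlert(event);
  if (alert === null) return false;
  await send(alert);
  return true;
}
```

In production the route would call `handleDeployEvent(event, slackWebhookSender(webhookUrl))`. In tests, pass a function that records messages instead. Keeping the decision (`buildAlert`) separate from delivery is what makes the service easy for both humans and LLMs to test in isolation.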

Microservices = Better Prompts

The APIs between services act as semantic boundaries that make reasoning easier — for both humans and LLMs. Instead of fuzzy internal function calls, you get explicit interfaces and contracts.
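One way to make such a contract explicit in a TypeScript monorepo is to combine a shared type with a runtime check at the service boundary. The `DeployFailedEvent` shape and the `parseDeployFailed` name below are hypothetical and invented for illustration. A schema library or an OpenAPI/JSON Schema definition could play the same role.

```typescript
// Hypothetical contract shared by producer (CI service) and consumer
// (notification service), e.g. exported from a shared workspace package.
interface DeployFailedEvent {
  version: 1;
  service: string;
  environment: "staging" | "production";
  commit: string;
  failedAt: string; // ISO-8601 timestamp
}

type ParseResult =
  | { ok: true; value: DeployFailedEvent }
  | { ok: false; error: string };

// Validate untrusted input (e.g. parsed JSON from the wire) against the contract.
function parseDeployFailed(input: unknown): ParseResult {
  if (typeof input !== "object" || input === null) {
    return { ok: false, error: "payload must be an object" };
  }
  const { version, service, environment, commit, failedAt } = input as Record<string, unknown>;
  if (version !== 1) return { ok: false, error: "unsupported version" };
  if (typeof service !== "string" || service.length === 0) {
    return { ok: false, error: "service must be a non-empty string" };
  }
  if (environment !== "staging" && environment !== "production") {
    return { ok: false, error: "environment must be staging or production" };
  }
  if (typeof commit !== "string") return { ok: false, error: "commit must be a string" };
  if (typeof failedAt !== "string" || Number.isNaN(Date.parse(failedAt))) {
    return { ok: false, error: "failedAt must be an ISO-8601 timestamp" };
  }
  return { ok: true, value: { version: 1, service, environment, commit, failedAt } };
}
```

Because the contract lives in one small file, it is also a compact thing to paste into a prompt. An LLM asked to change either service can see exactly what it must keep producing or accepting.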

For LLMs, this clarity improves:

- Planning (less ambiguity)

- Generation (cleaner prompts)

- Debugging (smaller scope)

But Didn’t Microservices Burn Us Already?

Yes. Microservices once promised engineering nirvana — and often delivered chaos:

- CI/CD pipelines everywhere

- Observability fatigue

- Three npm packages to change a button color

But with AI in the mix, microservices are cool again — not because they scale, but because they de-risk co-creation with machines.

LLMs don’t need the entire application — they need well-defined pieces. And microservices deliver exactly that.

The Vibe Stack: How We Make Microservices Not Suck

At PullFlow, we build AI-augmented microservices daily — and we do it without a DevOps nightmare or Kubernetes in local dev. Here’s our actual setup that keeps developer experience smooth and LLMs productive:

- Colima: Lightweight Docker runtime for fast, reliable local containers (especially friendly on macOS).

- Caddy: Smart reverse proxy with automatic HTTPS and per-service routing.

- cloudflared: Secure tunneling to expose local services — ideal for testing webhooks, LLM endpoints, and external integrations.

- NATS + JetStream: High-performance pub/sub system that powers inter-service messaging and async workflows.

- Turborepo + pnpm: Monorepo tooling, with shared packages managed through pnpm workspaces, deduplicated dependencies, and fast, cached builds.

- PostgreSQL: Primary relational database for most service persistence needs.

- Valkey: High-performance in-memory key-value store that serves as a shared cache layer across services.

- TimescaleDB: Time-series database extension for PostgreSQL, handling metrics and event data.

- Isolated service scaffolding: Each microservice lives in its own code path with dedicated persistent stores.
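Per-service routing in Caddy comes down to one site block per service. The sketch below renders a Caddyfile from a service list. The service names, ports, and `*.localhost` hostnames are hypothetical, and this is not PullFlow's actual configuration. For local names like these, Caddy's automatic HTTPS issues certificates from its own local CA rather than a public one.

```typescript
// Hypothetical service registry: one entry per microservice in local dev.
interface ServiceRoute {
  name: string; // becomes the subdomain, e.g. "notifications"
  port: number; // local port the service listens on
}

// Render one Caddyfile site block per service, routing
// <name>.<domain> to localhost:<port> via reverse_proxy.
function renderCaddyfile(services: ServiceRoute[], domain = "localhost"): string {
  const seen = new Set<string>();
  const blocks = services.map(({ name, port }) => {
    if (!/^[a-z0-9-]+$/.test(name)) throw new Error(`invalid service name: ${name}`);
    if (seen.has(name)) throw new Error(`duplicate service name: ${name}`);
    if (!Number.isInteger(port) || port < 1 || port > 65535) {
      throw new Error(`invalid port for ${name}: ${port}`);
    }
    seen.add(name);
    return `${name}.${domain} {\n\treverse_proxy localhost:${port}\n}`;
  });
  return blocks.join("\n\n") + "\n";
}

const caddyfile = renderCaddyfile([
  { name: "api", port: 4000 },
  { name: "notifications", port: 4001 },
]);
console.log(caddyfile);
```

Generating the proxy config from the same list that defines the services keeps routing in sync as services are added. It also makes routing one more explicit, reviewable artifact rather than tribal knowledge.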

This setup gives us:

- Predictable, testable dev environments that scale with the team

- Clear interfaces that LLMs can reason about

- Service boundaries that preserve human sanity and AI promptability

Microservices can be fast, modular, and developer-first — if you design for it.

The LLM-Native Stack Is Coming

We’re already seeing a shift:

- LLMs spinning up CRUD APIs from OpenAPI schemas

- Agents orchestrating services via message buses

- Prompts as the new CLI
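Orchestrating services over a message bus relies on subject-based routing. NATS, the bus in the stack above, addresses messages by dot-separated subjects with two wildcards: `*` matches exactly one token, and `>` matches one or more trailing tokens. The in-memory sketch below mimics only those matching rules, so the idea runs without a server. It is not the NATS client API, it ignores JetStream persistence, and the subject names are hypothetical.

```typescript
// NATS-style subject matching (illustrative, not the NATS client library):
//   "*" matches exactly one token; ">" matches one or more tokens and must be last.
function subjectMatches(pattern: string, subject: string): boolean {
  const p = pattern.split(".");
  const s = subject.split(".");
  for (let i = 0; i < p.length; i++) {
    if (p[i] === ">") return i === p.length - 1 && s.length > i;
    if (i >= s.length) return false;
    if (p[i] !== "*" && p[i] !== s[i]) return false;
  }
  return p.length === s.length;
}

type Handler = (subject: string, data: unknown) => void;

// Minimal in-memory bus: synchronous fan-out to every matching subscriber.
class InMemoryBus {
  private subs: { pattern: string; handler: Handler }[] = [];

  subscribe(pattern: string, handler: Handler): void {
    this.subs.push({ pattern, handler });
  }

  // Returns how many subscribers received the message.
  publish(subject: string, data: unknown): number {
    let delivered = 0;
    for (const { pattern, handler } of this.subs) {
      if (subjectMatches(pattern, subject)) {
        handler(subject, data);
        delivered++;
      }
    }
    return delivered;
  }
}

// Example: an alerting agent and an audit log both react to a failed deploy.
const bus = new InMemoryBus();
bus.subscribe("deploy.*.failed", (subject, data) => console.log("alert:", subject, data));
bus.subscribe("deploy.>", (subject) => console.log("audit:", subject));
bus.publish("deploy.billing.failed", { commit: "a1b2c3d" }); // both subscribers receive it
```

Subjects act as a lightweight contract. An agent only needs to know which subjects to publish and subscribe to, not the internals of the services on the other side.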

As AI takes a bigger role in software development, we need architectures that support modularity, autonomy, and safety. Microservices aren’t just back — they might be foundational to the LLM-native dev stack.

At PullFlow…

We’re designing for a future where humans and AI agents build software together. That’s why we’ve embraced microservices — not as a scaling strategy, but as a collaboration protocol.

Want to vibe-code with confidence? Start with good boundaries.