Beyond DORA: Redefining DevOps Metrics in the Age of AI

For the past decade, DORA metrics have been the gold standard for measuring software delivery performance. But as AI agents take on more of the development work, are these metrics still enough?

📊 The Legacy of DORA Metrics: Understanding Our Foundation

The DORA metrics emerged from research by the DevOps Research and Assessment (DORA) team, which studied thousands of organizations to identify what really drives software delivery performance. That research established four key metrics, now used by DevOps teams worldwide:

| Metric | Description |
|---|---|
| Deployment Frequency | How often you release to production |
| Lead Time for Changes | How long it takes to get code from commit to production |
| Change Failure Rate | The percentage of deployments that cause a failure in production |
| Time to Restore Service | How long it takes to restore service after a production failure |

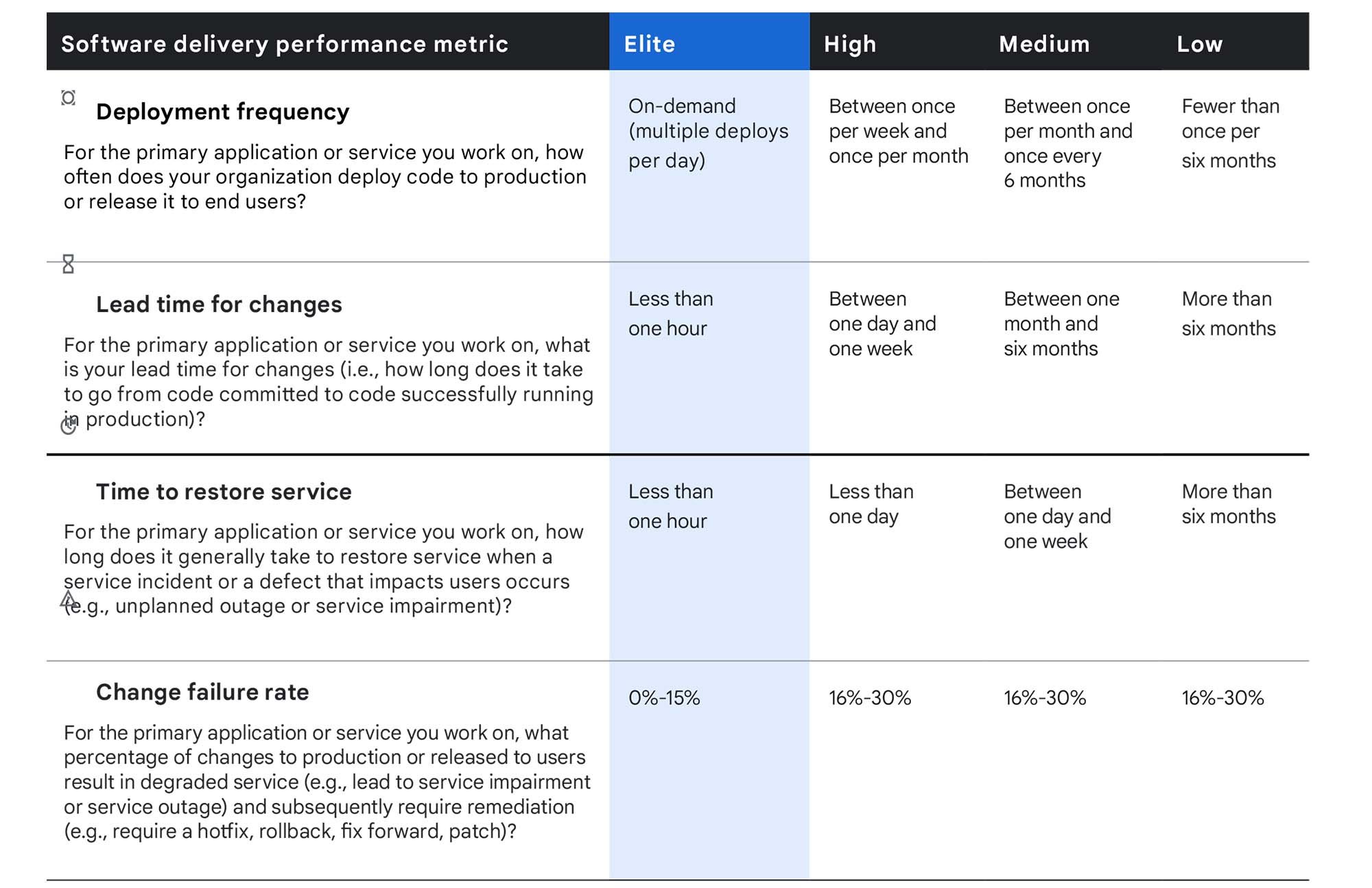

These metrics have helped teams see where they stand, created a common language for DevOps success, and given teams clear targets. According to Google Cloud’s research, companies can be ranked as Elite, High, Medium, or Low performers based on these metrics, giving teams a way to benchmark themselves.
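
To make the four definitions concrete, here is a minimal Python sketch that computes them from a list of deployment records. The `Deployment` record and `dora_metrics` function are hypothetical, not part of any official DORA tooling. The sketch assumes each deployment carries its commit and deploy timestamps, approximates time to restore as the gap between a failing deployment and service restoration, and summarizes durations with medians, which is one reasonable choice among several.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape; real data would come from CI/CD and incident tools.
    commit_time: datetime                     # when the change was committed
    deploy_time: datetime                     # when it reached production
    failed: bool = False                      # did this deployment cause a production failure?
    restored_time: Optional[datetime] = None  # when service was restored, if it failed


def dora_metrics(deploys: list[Deployment], window_days: int) -> dict[str, float]:
    """Compute the four DORA metrics over a window of deployments."""
    if not deploys:
        raise ValueError("need at least one deployment")
    lead_times = [d.deploy_time - d.commit_time for d in deploys]
    failures = [d for d in deploys if d.failed]
    # Assumes the failure began at deploy time (a simplification).
    restore_times = [
        d.restored_time - d.deploy_time for d in failures if d.restored_time is not None
    ]
    return {
        "deploys_per_day": len(deploys) / window_days,
        "median_lead_time_hours": median(lead_times).total_seconds() / 3600,
        "change_failure_rate": len(failures) / len(deploys),
        "median_time_to_restore_hours": (
            median(restore_times).total_seconds() / 3600 if restore_times else 0.0
        ),
    }
```

For example, one successful deployment with a 4-hour lead time and one failing deployment with an 8-hour lead time, restored an hour later, over a 7-day window give a median lead time of 6 hours, a 50% change failure rate, and a 1-hour median time to restore.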

🤖 The AI Revolution in Development: New Paradigms Emerge

AI in development workflows is changing software delivery in ways that DORA metrics don’t fully capture:

- AI agents can write and review code so fast that traditional deployment frequency benchmarks lose much of their meaning

- Lead time measurements don’t account for AI’s ability to work on several changes in parallel

- It’s hard to know who’s responsible for failures when both humans and AI contribute to code

- Recovery time now depends on both AI diagnostic tools and human intervention
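
One way to start untangling responsibility is to label each change by who authored it and compute change failure rate per group. The sketch below assumes such a label already exists (for example, derived from PR metadata); the `Change` record, the `author_type` values, and `failure_rate_by_author` are illustrative names, not an established standard.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Change:
    author_type: str      # hypothetical label: "human", "ai", or "mixed"
    caused_failure: bool  # did this change lead to a production failure?


def failure_rate_by_author(changes: list[Change]) -> dict[str, float]:
    """Change failure rate computed separately for each authorship group."""
    totals: dict[str, int] = defaultdict(int)
    failures: dict[str, int] = defaultdict(int)
    for c in changes:
        totals[c.author_type] += 1
        if c.caused_failure:
            failures[c.author_type] += 1
    return {group: failures[group] / totals[group] for group in totals}


changes = [Change("human", False), Change("human", True), Change("ai", False), Change("mixed", True)]
print(failure_rate_by_author(changes))  # {'human': 0.5, 'ai': 0.0, 'mixed': 1.0}
```

Keeping a separate "mixed" bucket avoids forcing a single owner onto changes that humans and AI wrote together. The hard part in practice is producing trustworthy labels, which this sketch takes as given.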

Many teams using AI pair-programming tools find that even as their DORA metrics improve, those numbers don’t reflect the real changes in how they work and collaborate.

🔄 The Collaboration Blind Spot: What DORA Doesn’t See

Traditional metrics focus mostly on output and reliability, missing important aspects of modern development:

- How well humans and AI work together

- How decisions get split between developers and AI tools

- The value of human creativity versus AI implementation

- How knowledge and learning spread through AI-assisted teams

The software development lifecycle is changing significantly. Pull request workflows, review processes, and contribution patterns look very different in teams using AI. Key shifts include:

- Review Turnaround Evolution: AI tools are changing code reviews, giving initial feedback in seconds instead of hours

- Contribution Diversity: Changes in who (or what) writes code, with a large share of some teams’ PRs now handled by automated systems

- Quality Control Distribution: How review responsibilities shift between humans and AI, creating workflows in which humans focus on big-picture decisions

- Wait Time Reduction: New ways to cut down delays between PR creation and first feedback, often using AI assistants
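
Review turnaround and contribution diversity can both be derived from ordinary PR data. This minimal sketch assumes each PR records whether an automated system opened it and each review records whether it came from an automated (AI) reviewer; the `PullRequest` and `Review` records and the function names are hypothetical, and real data would come from your code host's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Review:
    is_bot: bool            # hypothetical flag: True for AI/automated reviewers
    submitted_at: datetime


@dataclass
class PullRequest:
    author_is_bot: bool     # hypothetical flag: True if an automated system opened the PR
    opened_at: datetime
    reviews: list[Review] = field(default_factory=list)


def first_feedback(pr: PullRequest, from_bots: bool) -> Optional[timedelta]:
    """Wait from PR creation to the first review from a bot (or from a human)."""
    times = [r.submitted_at for r in pr.reviews if r.is_bot == from_bots]
    return min(times) - pr.opened_at if times else None


def review_summary(prs: list[PullRequest]) -> dict[str, float]:
    """Bot-authored PR share plus median minutes to first AI and first human feedback."""
    if not prs:
        raise ValueError("need at least one pull request")

    def median_minutes(from_bots: bool) -> float:
        waits = [first_feedback(pr, from_bots) for pr in prs]
        waits = [w for w in waits if w is not None]  # skip PRs with no such review
        return median(waits).total_seconds() / 60 if waits else float("nan")

    return {
        "bot_authored_share": sum(pr.author_is_bot for pr in prs) / len(prs),
        "median_first_ai_feedback_min": median_minutes(True),
        "median_first_human_feedback_min": median_minutes(False),
    }
```

Reporting AI and human first-feedback times separately keeps a fast bot comment from masking a long wait for a human reviewer.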

The best teams aren’t just trying to deploy faster. They’re rethinking how they work by combining human creativity with AI efficiency. Current metrics capture neither these changes nor the new practices behind them.

When AI handles routine tasks, measuring only deployment speed doesn’t show the real value being created.

🚀 Decision Quality Over Deployment Quantity: A Shift in Focus

As AI systems handle more implementation details, humans are focusing more on higher-level decisions:

- Strategic architecture choices that guide AI implementation

- Creative problem framing that AI tools then solve

- Cross-functional collaboration that connects business needs with technical solutions

- Ethical oversight and quality checks of AI-generated code

These activities need new metrics that measure not just how fast code ships, but how well the human-AI partnership delivers real business value.

📈 The New Metrics Landscape: Measuring What Matters

To fully understand performance in the AI age, we need metrics that complement DORA by measuring:

- 👥 Contributor Dynamics: The balance of work between humans and AI, including which tasks each handles best

- ⚡ Review Efficiency: How quickly feedback happens on code changes, from both AI systems and human reviewers

- 🔄 Decision Flow Visibility: Visual maps showing how work moves through review stages and where it gets stuck

- ⏱️ Wait Time Analysis: Detailed breakdowns of where time gets lost, separating human and automated stages

- 🤖 Automation Impact: Tracking how many routine tasks AI handles and how this frees up engineers for creative work
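
Wait time analysis and bottleneck detection can be sketched from per-PR stage timelines. This example assumes each PR's history is available as a chronological list of (stage, entered_at) pairs; the stage names, the `AUTOMATED_STAGES` set, and the helper functions are hypothetical placeholders to adapt to a real workflow. Time in a stage is measured until the next stage begins, so the terminal stage (here `merged`) contributes no duration.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from statistics import median

# Hypothetical stage names; adjust to match your own review workflow.
AUTOMATED_STAGES = {"ci_checks", "ai_review"}

# One PR's history: (stage name, time the PR entered that stage), oldest first.
Timeline = list[tuple[str, datetime]]


def stage_waits(timelines: list[Timeline]) -> dict[str, timedelta]:
    """Median time spent in each stage, measured until the next stage begins."""
    durations: dict[str, list[timedelta]] = defaultdict(list)
    for timeline in timelines:
        # Pair each stage with its successor; the final stage has no end time.
        for (stage, start), (_, end) in zip(timeline, timeline[1:]):
            durations[stage].append(end - start)
    return {stage: median(ds) for stage, ds in durations.items()}


def split_by_kind(waits: dict[str, timedelta]) -> tuple[dict, dict]:
    """Separate human stages from automated ones: returns (human, automated)."""
    human = {s: w for s, w in waits.items() if s not in AUTOMATED_STAGES}
    automated = {s: w for s, w in waits.items() if s in AUTOMATED_STAGES}
    return human, automated


def bottleneck(waits: dict[str, timedelta]) -> str:
    """Stage with the longest median wait."""
    return max(waits, key=lambda s: waits[s])
```

Calling `bottleneck` on the human and automated subsets separately shows whether delays come from automated checks or from people waiting on each other.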

These metrics look beyond speed and reliability to measure the quality of teamwork and decision-making in human-AI teams.

The Path Forward: Evolution, Not Replacement

DORA metrics aren’t wrong; they’re just incomplete for this new era. Instead of abandoning these proven measurements, smart organizations are adding collaboration-focused metrics that capture how development work is changing.

The most successful teams find that while DORA metrics are still useful indicators of operational performance, they need more ways to understand how effectively their developers are working with AI.

By measuring both delivery speed and collaboration quality, organizations can make sure they’re optimizing for what really matters: creating maximum value through the unique combination of human creativity and AI capabilities.

As we continue exploring AI-augmented development, our measurement systems need to evolve along with our tools and practices. The teams that succeed won’t just be those with the fastest deployments, but those that best combine human insight with AI efficiency to solve real problems.

Check out PullFlow for tools to help teams measure and improve these collaborative metrics in AI-augmented development environments.