Can We Measure Software Collaboration? Introducing Collab.dev

Traditional DORA metrics miss the mark when it comes to capturing the real impact of software projects and their communities. Collab.dev offers a new way to measure and improve collaboration in open source.

Today, we’re launching Collab.dev, an open source project and public resource that provides visibility into collaboration metrics across 250+ popular open source projects. Collab.dev transforms raw pull request events into clear visual dashboards that help open source communities and contributors understand and improve the metrics that drive innovation and long-term success.

Whether you’re looking to analyze how established projects collaborate or measure your own project’s performance with self-hosted metrics, Collab.dev makes it easy to unlock actionable insights.

Introduction

Open source projects succeed when collaboration works well. Generally, the open source community evaluates projects using stars and forks, metrics that unfortunately don’t measure collaboration quality.

At PullFlow, we’ve enhanced collaboration across hundreds of teams from WordPress to Epic Games. Our data consistently shows that effective collaboration directly improves development velocity, code quality, and innovation.

That’s why we built Collab.dev. It measures what matters in open source: PR workflows, review processes, contribution patterns, and automation usage. By analyzing these factors, we can identify what makes projects successful beyond popularity metrics.

The Measurement Opportunity

The open source ecosystem has traditionally relied on metrics like stars, forks, and download counts when discovering and understanding projects. While these popularity indicators are valuable, they tell only part of the story; they don’t capture the collaboration dynamics that shape how projects evolve and operate day-to-day.

What drives open source success is the quality of collaboration, both among human contributors and, increasingly, between humans and AI agents. The responsiveness to new ideas, thoroughness of code reviews, diversity of the contributor base, and efficiency of workflows all matter. These collaborative elements enhance a project’s resilience and pace of innovation.

Today’s open source landscape includes a growing ecosystem of bots and AI agents handling everything from dependency updates to code generation. Understanding this hybrid collaboration model provides crucial context that popularity metrics alone can’t capture.

Our Collaboration Measurement Journey

Our quest to measure collaboration began with a simple observation: the most successful teams weren’t those with the most developers or commits, but rather the ones that collaborated most effectively.

At PullFlow, our data analysis of 200,000+ pull requests across 12+ industries revealed that collaboration models dramatically impact outcomes regardless of team size or technology stack. Teams that unified communication channels saw up to a 4x reduction in wait times, while clear review processes consistently attracted more sustained community engagement.

Key Finding: The most effective teams we studied spent 62% less time waiting for feedback and merged code 3x faster than teams with similar technical skills but fragmented collaboration practices.

These insights from our work revealed an opportunity for the broader open source community. We developed a framework that looks beyond individual actions like commits and comments to measure the interconnected system of collaboration that makes open source work. Instead of just counting activities, we analyze how PRs flow through systems, how contributors engage with each other, and how automation enhances human workflows.

What Collab.dev Measures

Each project page in Collab.dev has an intuitive dashboard of key metrics:

Contributor Dynamics

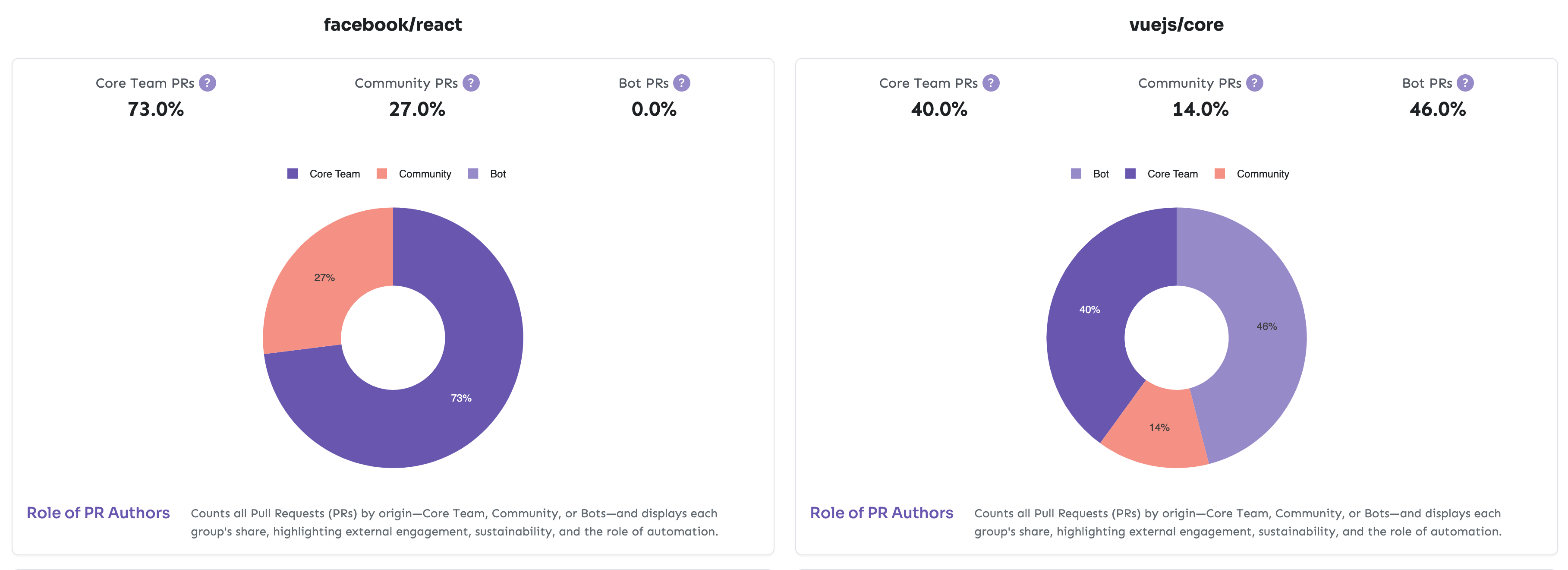

Analysis of PR sources (core team members, community contributors, or automated systems), revealing each project’s unique collaboration model and engagement patterns. Our contributor distribution chart shows the proportion of PRs created by core team members and by bots, with the remainder representing community contributions. We also track the balance between human and AI agent contributions, showing which bots are most active and how automation is reshaping development workflows.
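As a rough illustration of how a contributor distribution like this could be computed, here is a minimal Python sketch. It is not Collab.dev’s implementation: the `PullRequest` record, the helper names, and the rule that any login ending in `[bot]` (the suffix GitHub uses for GitHub App accounts) counts as a bot are all assumptions, and the core team is simply a set of logins supplied by the caller.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class PullRequest:
    author: str  # GitHub login of the PR author


def classify_author(login: str, core_team: set[str]) -> str:
    """Bucket a PR author as 'bot', 'core', or 'community'."""
    # Assumption: GitHub App accounts have logins ending in "[bot]".
    if login.endswith("[bot]"):
        return "bot"
    if login in core_team:
        return "core"
    return "community"


def contributor_distribution(prs: list[PullRequest], core_team: set[str]) -> dict[str, float]:
    """Share of PRs created by core members, bots, and everyone else."""
    counts = Counter(classify_author(pr.author, core_team) for pr in prs)
    total = len(prs)
    groups = ("core", "bot", "community")
    if total == 0:
        return {group: 0.0 for group in groups}
    return {group: counts[group] / total for group in groups}


def most_active_bots(prs: list[PullRequest], n: int = 3) -> list[tuple[str, int]]:
    """The n bot accounts that opened the most PRs, busiest first."""
    bots = Counter(pr.author for pr in prs if pr.author.endswith("[bot]"))
    return bots.most_common(n)


prs = [PullRequest("alice"), PullRequest("dependabot[bot]"),
       PullRequest("dependabot[bot]"), PullRequest("carol")]
print(contributor_distribution(prs, core_team={"alice"}))
# {'core': 0.25, 'bot': 0.5, 'community': 0.25}
print(most_active_bots(prs))
# [('dependabot[bot]', 2)]
```

Whoever is not a bot or a listed core member falls into the community bucket, which mirrors the "remainder" framing of the chart described above.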

Surprising Trend: Some of the most community-friendly projects have bot contribution rates approaching 40%, handling routine tasks that would otherwise create maintainer burnout.

Review Process & Quality

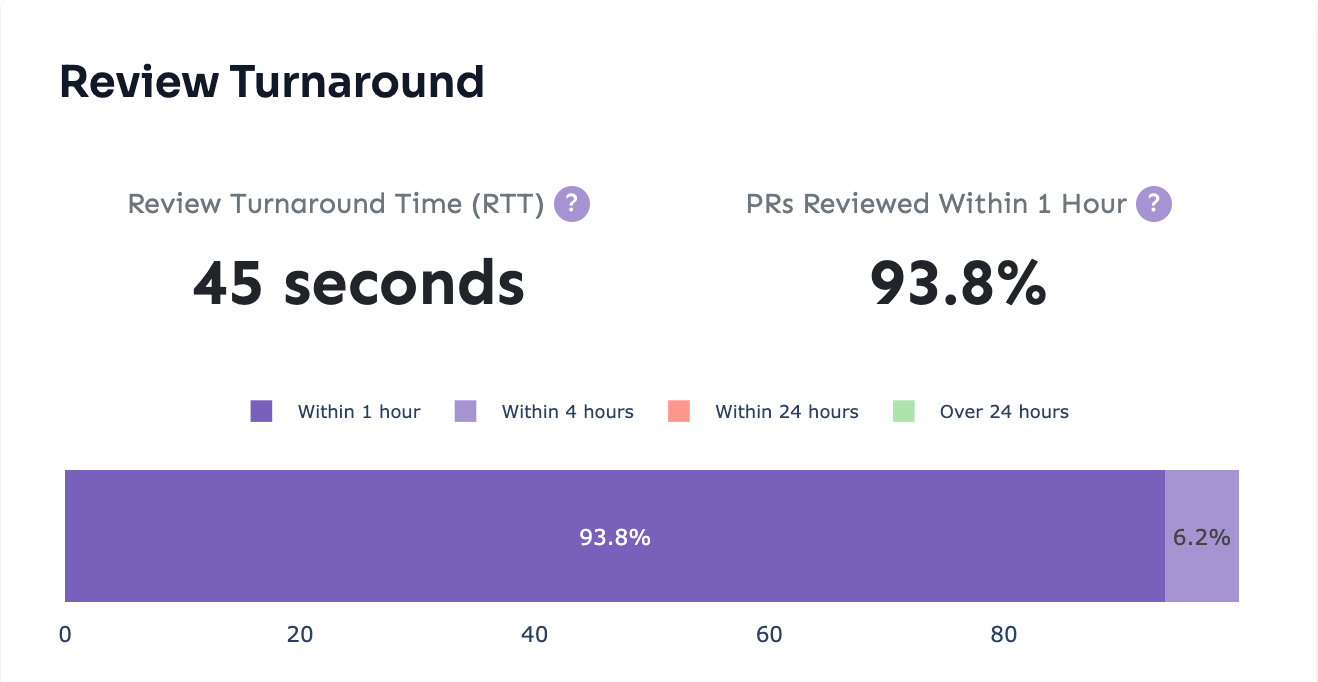

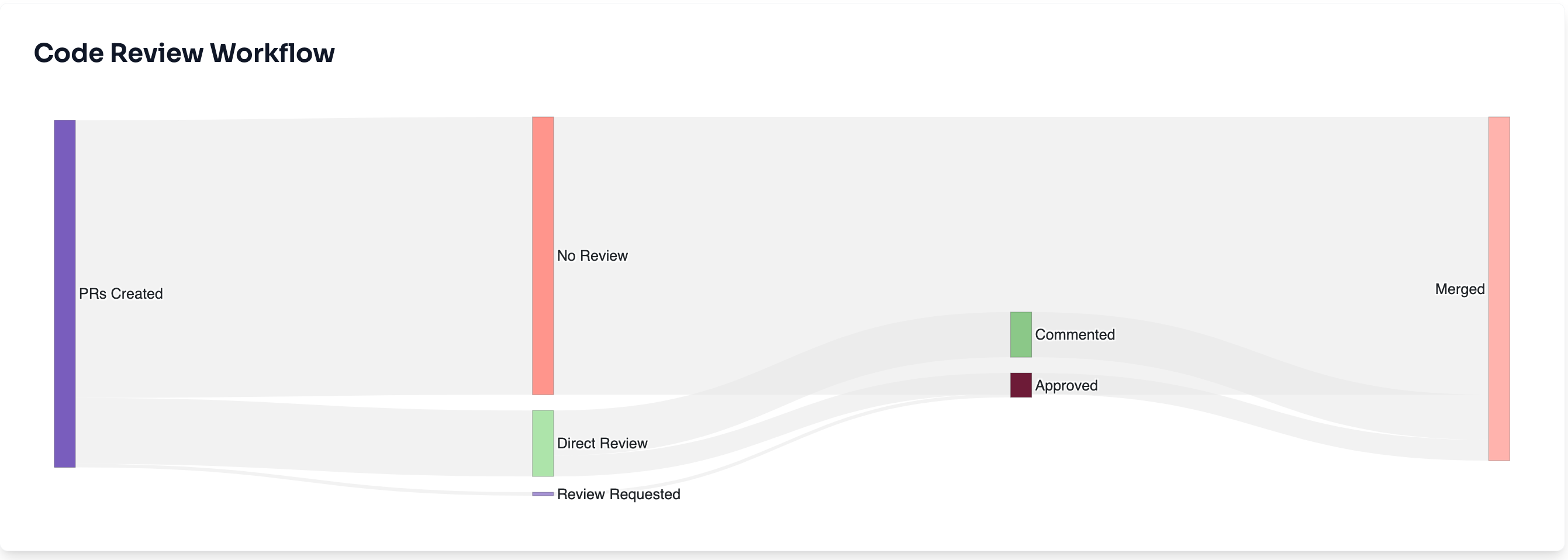

Review funnel visualizations showing how PRs progress through the system, highlighting each project’s commitment to collaborative quality practices. We measure what percentage of merged PRs received proper reviews and track how quickly PRs receive their first review, providing insight into a project’s responsiveness and quality control mechanisms.
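The two review metrics above can be sketched as follows, assuming each PR carries its creation time, an optional merge time, and the submission times of its reviews. Treating "reviewed" as "at least one review submitted before the merge" is our assumption, since the exact definition of a proper review isn’t spelled out here; the class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class PullRequest:
    created_at: datetime
    merged_at: Optional[datetime] = None  # None if the PR was never merged
    review_times: list[datetime] = field(default_factory=list)  # review submission times


def review_coverage(prs: list[PullRequest]) -> float:
    """Fraction of merged PRs with at least one review submitted before the merge."""
    merged = [pr for pr in prs if pr.merged_at is not None]
    if not merged:
        return 0.0
    reviewed = [pr for pr in merged
                if any(t <= pr.merged_at for t in pr.review_times)]
    return len(reviewed) / len(merged)


def median_time_to_first_review(prs: list[PullRequest]) -> Optional[timedelta]:
    """Median delay between opening a PR and its first review (reviewed PRs only)."""
    waits = [min(pr.review_times) - pr.created_at for pr in prs if pr.review_times]
    return median(waits) if waits else None


t0 = datetime(2025, 1, 1, 9, 0)
prs = [
    PullRequest(t0, merged_at=t0 + timedelta(hours=30), review_times=[t0 + timedelta(hours=4)]),
    PullRequest(t0, merged_at=t0 + timedelta(hours=2)),        # merged without review
    PullRequest(t0, review_times=[t0 + timedelta(hours=10)]),  # still open
]
print(review_coverage(prs))              # 0.5
print(median_time_to_first_review(prs))  # 7:00:00
```

The median is used for first-review time because a few PRs that sit unreviewed for weeks would otherwise skew an average.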

Time & Efficiency Metrics

Comprehensive analysis of the PR lifecycle, including approval time (how PR size affects review speed), merge time distributions, and detailed wait time breakdowns. These metrics pinpoint specific delays in the collaboration process, reveal workflow bottlenecks, and help teams optimize their developer experience.
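Here is a minimal sketch of a wait time breakdown and of grouping approval time by PR size, assuming every PR in the input has been reviewed, approved, and merged. The three phase names, the size buckets (XS up to 10 changed lines, S up to 100, M up to 500, L above that), and all function names are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class MergedPullRequest:
    created_at: datetime
    first_review_at: datetime
    approved_at: datetime
    merged_at: datetime
    lines_changed: int  # additions + deletions


def wait_breakdown(pr: MergedPullRequest) -> dict[str, timedelta]:
    """Split a merged PR's lifetime into three consecutive phases."""
    return {
        "waiting_for_first_review": pr.first_review_at - pr.created_at,
        "review_until_approval": pr.approved_at - pr.first_review_at,
        "approval_to_merge": pr.merged_at - pr.approved_at,
    }


# Hypothetical size buckets: (upper bound on lines changed, label).
SIZE_BUCKETS = [(10, "XS"), (100, "S"), (500, "M")]


def size_bucket(lines_changed: int) -> str:
    for limit, label in SIZE_BUCKETS:
        if lines_changed <= limit:
            return label
    return "L"


def median_approval_time_by_size(prs: list[MergedPullRequest]) -> dict[str, timedelta]:
    """Median time from opening to approval, grouped by PR size bucket."""
    groups: dict[str, list[timedelta]] = {}
    for pr in prs:
        elapsed = pr.approved_at - pr.created_at
        groups.setdefault(size_bucket(pr.lines_changed), []).append(elapsed)
    return {label: median(times) for label, times in groups.items()}
```

Summing the three phases gives the PR’s total time to merge, so the breakdown shows which phase accounts for most of the delay.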

Collaboration Flow Visualization

Interactive visualizations of the actual paths PRs take through review and approval stages, helping teams identify process inefficiencies and collaboration gaps. These flow diagrams provide a holistic view of how work moves through the system and where opportunities for improvement exist.
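Under the hood, a flow diagram like this comes down to counting paths and stage-to-stage transitions. The sketch below assumes each PR has already been turned into an ordered list of stage names; the stage labels (`opened`, `reviewed`, `approved`, `merged`, `closed`) and the function names are hypothetical, not Collab.dev’s schema.

```python
from collections import Counter


def collapse_repeats(stages: list[str]) -> tuple[str, ...]:
    """Drop consecutive duplicate stages, e.g. two review rounds in a row."""
    path: list[str] = []
    for stage in stages:
        if not path or path[-1] != stage:
            path.append(stage)
    return tuple(path)


def path_counts(timelines: list[list[str]]) -> Counter:
    """How many PRs followed each distinct end-to-end path."""
    return Counter(collapse_repeats(t) for t in timelines)


def transition_counts(timelines: list[list[str]]) -> Counter:
    """How often PRs moved along each stage-to-stage edge."""
    edges: Counter = Counter()
    for t in timelines:
        path = collapse_repeats(t)
        edges.update(zip(path, path[1:]))
    return edges


timelines = [
    ["opened", "reviewed", "approved", "merged"],
    ["opened", "reviewed", "reviewed", "approved", "merged"],
    ["opened", "closed"],
]
print(path_counts(timelines).most_common(1))
# [(('opened', 'reviewed', 'approved', 'merged'), 2)]
```

The transition counts map onto the link weights of a Sankey-style diagram, while the path counts show the most common end-to-end routes through review.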

Analyze Your Own Repositories

Don’t see a project you’re interested in? Collab.dev makes it easy to generate these collaboration metrics for the projects you care about. Public repositories are supported today, and support for private repositories is on the roadmap.

For Public Repositories

Have a favorite open source project that’s not yet on Collab.dev? You can request metrics for any public GitHub repository:

- Sign in with GitHub

- Enter the repository URL (e.g., github.com/your-favorite/project)

- View the metrics of the given repository

- Share these insights with your team or the broader community using the built-in sharing tools

- Save other repositories to your favorites for quick access to metrics you reference frequently
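Behind a flow like this, a tool has to turn the repository URL into API calls. The sketch below shows one way to do that with GitHub’s REST endpoint for listing pull requests (`GET /repos/{owner}/{repo}/pulls`), which accepts `state`, `per_page` (up to 100), and `page` query parameters. The helper names and the URL pattern are assumptions, and this is not necessarily how Collab.dev fetches its data.

```python
import re
from urllib.parse import urlencode

# Accepts forms like "github.com/owner/repo" or "https://github.com/owner/repo.git".
_REPO_URL = re.compile(
    r"^(?:https?://)?(?:www\.)?github\.com/([A-Za-z0-9-]+)/([A-Za-z0-9._-]+?)(?:\.git)?/?$"
)


def parse_repo_url(url: str) -> tuple[str, str]:
    """Return (owner, repo) for a GitHub repository URL."""
    match = _REPO_URL.match(url.strip())
    if match is None:
        raise ValueError(f"not a GitHub repository URL: {url!r}")
    return match.group(1), match.group(2)


def pulls_endpoint(owner: str, repo: str, page: int = 1) -> str:
    """REST URL for one page (up to 100 PRs) of a repository's pull requests."""
    query = urlencode({"state": "all", "per_page": 100, "page": page})
    return f"https://api.github.com/repos/{owner}/{repo}/pulls?{query}"


owner, repo = parse_repo_url("github.com/your-favorite/project")
print(pulls_endpoint(owner, repo))
# https://api.github.com/repos/your-favorite/project/pulls?state=all&per_page=100&page=1
```

One simple way to collect a repository’s full history is to request successive pages until an empty page comes back.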

Community Contribution: Each public repository added enriches our collective understanding of open source collaboration patterns. Your requests help build a more comprehensive picture of what works across different project types and communities.

Why These Metrics Matter to Developers

Collaboration metrics provide concrete benefits beyond traditional popularity indicators:

Review turnaround and community engagement patterns offer visibility into project operations, helping set realistic expectations about response times and revealing how contributions are distributed between core teams and community members.

Historical data on review coverage, wait times, and merge processes provides insights into workflow efficiency and project health that activity graphs alone don’t capture. For potential contributors, these metrics can indicate which projects have review processes that align with their expectations.

Collaboration metrics complement technical evaluations, allowing developers to consider project sustainability and community health alongside feature requirements when making technology decisions.

Benefits for Different Stakeholders

Collaboration metrics provide unique value to everyone in the open source ecosystem, regardless of their role.

Benefits for Open Source Maintainers and Project Leaders

Maintainers gain visibility into their community engagement patterns, helping identify bottlenecks in review processes and opportunities to improve contributor experiences. These metrics highlight where time is spent in the PR lifecycle, enabling teams to optimize workflows and reduce friction points that may discourage contributions.

Benefits for Contributors and Community Participants

Before investing time in a project, contributors can evaluate responsiveness and review patterns. Understanding typical review turnaround times and approval processes helps set realistic expectations and identify projects where contributions are likely to receive timely feedback, potentially leading to more productive and satisfying contribution experiences.

Benefits for Organizations and Enterprise Users

Organizations can use collaboration metrics to assess project sustainability beyond commit frequency. Active review processes and balanced contributor distributions often indicate healthy projects with lower abandonment risk, helping teams make more informed decisions about technology adoption.

By providing transparency into these previously hidden aspects of project health, collaboration metrics foster a more informed and efficient open source ecosystem where expectations align with reality and resources flow to projects with sustainable practices.

Early Observations from Popular Projects

Our analysis of over 250 popular open source JavaScript/TypeScript projects has revealed fascinating patterns that showcase the incredible diversity and ingenuity of open source communities. We’re grateful to the thousands of volunteers whose contributions made this analysis possible.

These observations represent just the beginning of our research. We’ll be sharing more detailed analyses, trends, and insights through our newsletter as we continue to explore the data.

Diverse Community Structures That Work

We’ve observed that successful projects thrive with different community structures. Some, like React, benefit from a concentrated contribution pattern where a small group of core contributors makes the majority of PRs, while others, like Vue, flourish with a more distributed model where contributions come from a broader community base.
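One way to put a number on "concentrated" versus "distributed" is to measure how much of the PR volume comes from the most active authors. The following hypothetical helpers sketch that idea and are not Collab.dev’s metric: `top_k_share` returns the fraction of PRs opened by the k most active authors, and `authors_for_majority` returns the smallest number of authors who together opened more than half of all PRs.

```python
from collections import Counter


def top_k_share(authors: list[str], k: int = 5) -> float:
    """Fraction of PRs opened by the k most active authors (one list entry per PR)."""
    if not authors:
        return 0.0
    top = sum(n for _, n in Counter(authors).most_common(k))
    return top / len(authors)


def authors_for_majority(authors: list[str]) -> int:
    """Smallest number of authors who together opened more than half of all PRs."""
    running = 0
    for rank, n in enumerate(sorted(Counter(authors).values(), reverse=True), start=1):
        running += n
        if 2 * running > len(authors):
            return rank
    return 0  # only reached when there are no PRs


concentrated = ["core-dev"] * 8 + ["guest-1", "guest-2"]
distributed = [f"dev-{i}" for i in range(10)]
print(top_k_share(concentrated, k=1), authors_for_majority(concentrated))  # 0.8 1
print(top_k_share(distributed, k=1), authors_for_majority(distributed))    # 0.1 6
```

A low `authors_for_majority` value points to a concentrated model, and a high one to a distributed model; neither is better on its own.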

The Growing Role of Automation & AI Agents

The open source community has been at the forefront of embracing automation to enhance human collaboration. Projects like Next.js thoughtfully integrate bots for nearly 30% of their PRs, handling routine tasks from dependency updates to documentation fixes. This innovative use of automation allows human contributors to focus on more creative and complex work.

Future Trend: Our data suggests that by 2026, over 50% of PRs in popular repositories will involve some form of AI assistance, either through automated PR creation or AI-assisted code review.

Thoughtful Process Design

Many projects have developed remarkably effective review processes through community experimentation and iteration. Projects with clearly defined contribution guidelines often achieve review turnaround up to 3x faster. Svelte’s community, for example, has created a streamlined review process resulting in median first-review times of under 24 hours despite a relatively small core team.

Smart Time Investment

Open source maintainers have developed creative solutions to maximize their limited volunteer time. Our analysis shows that projects focusing on reducing the time between PR creation and first review see the greatest overall efficiency gains. Communities that have implemented even simple automated triage systems significantly improve contributor experiences.
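A simple automated triage rule can be as small as flagging PRs that have waited too long for a first review. In this sketch, the `OpenPullRequest` record, the `needs_first_review` helper, and the 24-hour default threshold are hypothetical; a real system might pull these fields from the GitHub API and then label, assign, or ping a reviewer.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class OpenPullRequest:
    number: int
    created_at: datetime  # timezone-aware
    has_review: bool


def needs_first_review(
    prs: list[OpenPullRequest],
    now: datetime,
    max_wait: timedelta = timedelta(hours=24),  # hypothetical threshold
) -> list[int]:
    """Numbers of unreviewed PRs that have waited longer than max_wait, oldest first."""
    stale = [pr for pr in prs if not pr.has_review and now - pr.created_at > max_wait]
    stale.sort(key=lambda pr: pr.created_at)
    return [pr.number for pr in stale]


now = datetime(2025, 1, 3, 12, tzinfo=timezone.utc)
queue = [
    OpenPullRequest(101, now - timedelta(hours=30), has_review=False),
    OpenPullRequest(102, now - timedelta(hours=50), has_review=False),
    OpenPullRequest(103, now - timedelta(hours=40), has_review=True),
    OpenPullRequest(104, now - timedelta(hours=2), has_review=False),
]
print(needs_first_review(queue, now))  # [102, 101]
```

Sorting oldest first puts the contributors who have waited longest at the top of the queue, which targets the creation-to-first-review gap described above.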

Current Status and Future Plans

The Collab.dev project is open source on GitHub. It currently tracks 250+ JavaScript and TypeScript projects from npm. Here’s our roadmap for expanding the platform:

🚀 Available Now

- Core Platform: 250+ JavaScript/TypeScript projects with complete collaboration metrics

- Community Feedback: Actively gathering insights to guide our next development priorities

- User-Contributed Projects: Allowing users to add any public open source project for analysis

🔮 Coming Soon

- Private Repositories: Analyze your own private repositories with enhanced team collaboration metrics

- Ecosystem Expansion: Adding Python, Rust, and Go projects

- Historical Trends: Tracking how collaboration patterns evolve over time

- Advanced Analytics: AI-powered insights and recommendations

- Comparison Tools: Enhanced features to evaluate projects across multiple dimensions

Get Involved: Want to see your favorite project or language added sooner? Let us know what you’d like to see next!

Join the Conversation

For Collab.dev, this is just the beginning of a broader conversation about measuring what matters in open source collaboration. We believe that by making these metrics visible, we can collectively improve how we work together.

Community Impact: When projects implement clear collaboration practices based on metrics, we’ve seen average first-time contributor retention increase by 47%.
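The exact definition of first-time contributor retention isn’t given here, so the sketch below uses one plausible definition, purely as an assumption: among authors whose first PR falls inside a cohort window, the share who open a second PR within a follow-up period (90 days by default). The function name, parameters, and window are hypothetical. The input should cover a project’s full PR history so that "first PR" really means first. The example does not reproduce the 47% figure.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def first_time_retention(
    prs: list[tuple[str, datetime]],
    cohort_start: datetime,
    cohort_end: datetime,
    follow_up: timedelta = timedelta(days=90),
    exclude: frozenset = frozenset(),
) -> float:
    """Share of authors whose first PR falls in [cohort_start, cohort_end)
    and who open a second PR within follow_up of the first one.

    prs holds (author_login, created_at) pairs; logins in exclude
    (for example core team members or bots) are ignored.
    """
    history: dict[str, list[datetime]] = defaultdict(list)
    for author, created_at in prs:
        if author not in exclude:
            history[author].append(created_at)

    cohort = 0
    retained = 0
    for times in history.values():
        times.sort()  # times[0] is the author's first PR
        if cohort_start <= times[0] < cohort_end:
            cohort += 1
            if len(times) > 1 and times[1] - times[0] <= follow_up:
                retained += 1
    return retained / cohort if cohort else 0.0


base = datetime(2025, 1, 1)
prs = [("ann", base), ("ann", base + timedelta(days=10)),
       ("bob", base + timedelta(days=5)),
       ("cy", base + timedelta(days=3)), ("cy", base + timedelta(days=200))]
print(round(first_time_retention(prs, base, base + timedelta(days=30)), 2))  # 0.33
```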

We invite you to:

- Measure your public repository and see your community’s collaboration metrics in action

- Explore the data at Collab.dev and discover insights about projects you care about

- Join the discussion in our forum, where we’re developing these metrics in the open

- Subscribe for regular updates on new insights, data visualizations, collaboration trends, and product features

Our newsletter will feature ongoing analysis of collaboration patterns across the open source ecosystem, deep dives into specific projects, and early access to new metrics and features as we develop them. We’re committed to sharing what we learn about effective collaboration practices with the broader community.

For maintainers interested in applying these insights to improve their own workflows, or for organizations looking to enhance their internal collaboration, the full PullFlow platform offers additional tools and customized analysis.

Visit Collab.dev today to start exploring collaboration metrics for your favorite projects.

For teams looking to improve their own code review collaboration workflows, learn more about our GitHub + Slack + VS Code unified collaboration platform at PullFlow.com.